One of the benefits of generative AI is its ability to transform content from one medium to another: from text to speech, imagery, and video. In this post, Andy Tattersall explores one aspect of this ability by transforming his archive of written blog posts into a podcast, Talking Threads, and discusses why and how this could benefit research communication. … Read More →

Web platform can revolutionize the essay correction process

Seeking an alternative to the laborious process of grading essays, and specifically of identifying when an essay deviates from its assigned theme, researchers have developed the Corrector of Essays by Artificial Intelligence (Corretor de Redações por Inteligência Artificial, CRIA), a text feedback platform that simulates the guidelines and grading of Brazil's National High School Examination (Exame Nacional do Ensino Médio, ENEM). The tool is already being used by students and education professionals. … Read More →

AI agents, bots and academic GPTs

Bots and academic GPTs are built on large language models, such as those behind ChatGPT, and are designed, and sometimes trained, for more specific tasks. The idea is that, by being specialized, they will deliver better results than "generic" models. This post presents a selection of these bots and academic GPTs. Available in Portuguese only. … Read More →

Large Language Publishing [Originally published on the Upstream blog in January 2024]

The New York Times ushered in the New Year with a lawsuit against OpenAI and Microsoft. According to the filing, OpenAI and its Microsoft patron had stolen "millions of The Times' copyrighted news articles, in-depth investigations, opinion pieces, reviews, how-to guides," and more, all to train OpenAI's LLMs. … Read More →

Does Artificial Intelligence have hallucinations?

AI applications have demonstrated impressive capabilities, including the generation of very fluent and convincing responses. However, LLMs, chatbots, and similar systems are known for their tendency to generate inaccurate or nonsensical statements, more commonly known as "hallucinations." Could it be that they are on drugs? Available in Spanish only. … Read More →

Can AI reliably review scientific articles?

The cost of reviewing scientific publications, in both money and time, is growing to unmanageable proportions under current methods. AI should be used as a trust system, freeing up human resources for research tasks. SciELO should progressively incorporate AI evaluation modules into its preprint server as a further advance in the technologies it manages. Available in Spanish only. … Read More →

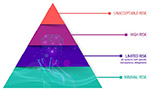

Research and scholarly communication, AI, and the upcoming legislation

Can AI be used to generate terrorist “papers”, spread deadly viruses, or learn how to make nuclear bombs at home? Is there legislation that can protect us? It looks like international regulation is on the way. … Read More →

AI: How to detect chatbot texts and their plagiarism

ChatGPT is asked about four topics under debate concerning the production of academic texts acceptable to scientific journal editors. Each question is followed by the answer given by the OpenAI application itself and then by our evaluation, based on recent sources published online. Finally, we offer some (human) reflections which, like everything else, are open to debate and to revision as the technology advances. … Read More →

ChatGPT and other AIs will transform all scientific research: initial reflections on uses and consequences – part 2

In this second part of the essay, we present some of the risks that arise specifically from the use of generative AI in science and academic work. Although these problems have not yet been fully mapped, we offer initial reflections to inform and encourage debate. … Read More →

ChatGPT and other AIs will transform all scientific research: initial thoughts on uses and consequences – part 1

We discuss possible consequences, risks, and paradoxes of using AI in research, such as a potential deterioration of research integrity and possible changes in the dynamics of knowledge production and in center-periphery relations in academia. We conclude by calling for an in-depth dialogue on regulation and on the creation of technologies adapted to our needs. … Read More →

Artificial Intelligence and research communication

Are chatbots really authors of scientific articles? Can they be held legally responsible or make ethical decisions? What do scientific societies, journal editors, and universities say? Can their output be included in original scientific articles? Drawing on the recent contributions presented here, we will publish a series of posts that try to answer these questions and any new ones that arise. … Read More →

GPT, machine translation, and how good they are: a comprehensive evaluation

Generative artificial intelligence models have demonstrated remarkable capabilities in natural language generation, but their performance in machine translation has not been thoroughly investigated. This post presents a comprehensive evaluation of GPT models for translation, compared with state-of-the-art commercial and research systems, including neural machine translation (NMT) systems, on texts in 18 languages. … Read More →

It takes a body to understand the world – why ChatGPT and other language AIs don't know what they're saying [Originally published in The Conversation in April 2023]

Large language models can’t understand language the way humans do because they can’t perceive and make sense of the world. … Read More →
