In a previous post, this blog, SciELO in Perspective, tackled the issue of university rankings¹. That post noted that some rankings are international in scope (Academic Ranking of World Universities – ARWU, Times Higher Education – THE, QS Top Universities, Leiden Ranking, Webometrics and SCImago Institutions Ranking – SIR); others are regional, covering only European institutions (U-Map and U-Multirank); and Brazil has its own national university ranking, the University Ranking of Folha (RUF).

The first national university rankings were published in the United States in 1983 by US News and World Report. These university guides, produced by media organizations or independent agencies, evaluate and sometimes rank higher education institutions using a combination of qualitative and quantitative data². The proliferation of such rankings in the United States, shaped by American culture, introduced “a competitive drive in national systems which was viewed as a positive influence on institutional behavior, and capable of improving quality”³ (Hazelkorn 2010). The American experience inspired international ranking systems such as those mentioned above.

It also inspired national rankings in other countries, such as the CHE-HochschulRanking in Germany; the Melbourne Institute International Standing of Australian Universities and the Good University Guide in Australia; and the ranking produced by the Japanese daily newspaper Asahi Shimbun. Among the most recently launched national rankings are the U-GR, produced by the University of Granada in Spain; the Ranking of Universities – Clase 13 in Mexico, launched in June 2013 in partnership with the National Autonomous University of Mexico (UNAM) and the newspaper El Economista; and, in Brazil, the University Ranking of Folha (RUF), the first ranking of Brazilian higher education institutions, produced by the newspaper Folha de São Paulo and launched in September 2012. The RUF is now in its second edition, released at the start of this month.

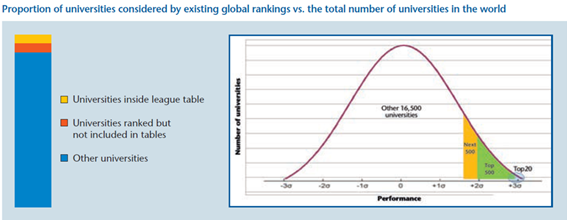

Most world rankings cover only about 1% to 3% (200 to 500 universities) of the roughly 17,000 higher education institutions worldwide⁴, so they exclude the great majority of existing universities. National rankings therefore have the merit of positioning institutions that would not otherwise appear in any listing. In Brazil's case, only two or three universities could be expected to appear in the international rankings; in its 2013 edition, the RUF expanded this set to 192 universities in the country. Its methodology is nonetheless open to criticism for placing institutions of very different natures and purposes, teaching universities and research universities alike, in the same listing. On the other hand, the RUF was innovative in adopting as its yardstick for academic output not only the traditional international database Web of Science but also sources more attuned to the Brazilian context, such as SciELO.

Figure source: Rauhvargers, 2011, p. 13

Disparate listings are increasingly emerging at different levels (international, regional and national), and this diversity shows that it is impossible to standardize and harmonize such different institutions by means of the criteria the rankings have adopted.

As Adam Habib urges: “comparative studies can reveal much and enable lessons to be learned from multifarious experiences only if we do not forget context”⁵ (Habib 2011).

Notes

¹ Indicadores de produtividade científica em rankings universitários: critérios e metodologias. SciELO em Perspectiva. [viewed 14 September 2013]. Available from: <http://blog.scielo.org/blog/2013/08/15/indicadores-de-produtividade-cientifica-em-rankings-universitarios-criterios-e-metodologias/>.

² HAZELKORN, E. Os rankings e a batalha por excelência de classe mundial: estratégias institucionais e escolhas de políticas. Rev. Ensino Superior UNICAMP, Apr. 2010, no. 1, p. 43-64. [viewed 12 September 2013]. Available from: <http://www.gr.unicamp.br/ceav/revistaensinosuperior/ed01_maio2010/pdf/Ed01_marco2010_ranckings.pdf>.

³ Translation: “a competitive drive in national systems which was viewed as a positive influence on institutional behavior, and capable of improving quality”.

⁴ RAUHVARGERS, A. Global university rankings and their impacts. Brussels: European University Association, 2011. (EUA Report on Rankings 2011). [viewed 12 September 2013]. Available from: <http://www.eua.be/pubs/Global_University_Rankings_and_Their_Impact.pdf>.

⁵ Translation: “comparative studies can reveal much and enable lessons to be learned from multifarious experiences only if we do not forget context”.

References

HABIB, A. A league apart. Times Higher Education, 13 Oct. 2011. [viewed 14 September 2013]. Available from: <http://www.timeshighereducation.co.uk/417758.article#.Tphc9B–hbM>.

HAZELKORN, E. Os rankings e a batalha por excelência de classe mundial: estratégias institucionais e escolhas de políticas. Rev. Ensino Superior UNICAMP, Apr. 2010, no. 1, p. 43-64. [viewed 12 September 2013]. Available from: <http://www.gr.unicamp.br/ceav/revistaensinosuperior/ed01_maio2010/pdf/Ed01_marco2010_ranckings.pdf>.

About Sibele Fausto

Collaborator on the SciELO program, post-graduate in Information Science from the School of Communication and the Arts of the University of São Paulo (PPGCI-ECA-USP), specialist in Health Sciences information at the Federal University of São Paulo in partnership with the Latin American Center for Health Sciences Information (UNIFESP-BIREME-PAHO-WHO), Sibele Fausto is a librarian in the Technical Department of the Integrated Library System of the University of São Paulo (DT-SIBi-USP).

Translated from the original in Portuguese by Nicholas Cop Consulting.



Thank you for pointing out the difficulty of including higher ed institutions with different missions in a single ranking. They ought to be evaluated against their own missions and against similar institutions. That is what my colleagues and I are trying to do with U-Multirank: find a set of common indicators that can be helpful in comparing different kinds of performance (education, research, knowledge transfer) at different geographical scales (regional, international). All institutions will find some of these indicators relevant and others not; we just provide the data in a (fairly) comparable way. The U-Multirank methodology should be attractive not just to the highly internationalised research universities at the tail of the curve, but also to, e.g., more teaching-oriented institutions with a regional focus. Besides, we try to do this not just for Europe but aim to be inclusive worldwide (sorry, I want to set that misconception right). We’d love to learn how SciELO could help worldwide rankings like ours to be more inclusive of Brazilian higher education.