By Andrew Plume & Judith Kamalski

To date, the rise of alternative metrics as supplementary indicators for assessing the value and impact of research articles has focussed primarily on the article and/or author level. However, such metrics – which may include social media mentions, coverage in traditional print and online media, and full-text download counts – have seldom been applied to higher levels of aggregation such as research topics, journals, institutions, or countries. In particular, article download counts (also known as article usage statistics) have not been used in this way owing to the difficulty of aggregating download counts for articles across multiple publisher platforms to derive a holistic view. A download is defined as the event where a user views the full-text HTML of an article, or downloads its full-text PDF, from a full-text journal article platform. While its meaning remains a matter of debate, a download is generally considered to indicate reader interest and/or research impact.

As part of the report ‘International Comparative Performance of the UK Research Base: 2013’, commissioned by the UK’s Department for Business, Innovation and Skills (BIS), download data were used in two different ways to unlock insights not otherwise available from more traditional, citation-based indicators. In the report, published in December 2013, download data were used alongside citation data in international comparisons to offer a different perspective on national research impact. They were also used to give a unique view of knowledge exchange between authors and readers in two distinct but entwined segments of the research-performing and research-consuming landscape: the academic and corporate sectors.

Comparing national research impact using a novel indicator derived from article download counts

Citation impact is by definition a lagging indicator. Newly published articles need to be read, after which they might influence studies that will be, are being, or have been carried out. These studies are then written up in manuscript form, peer-reviewed, published and finally included in a citation index such as Scopus. Only after these steps are completed can citations to earlier articles be systematically counted. Typically, a citation window of three to five years following the year of publication has proven to provide reliable results. For this reason, downloads have become an appealing alternative to investigate: it is possible to start counting downloads of full-text articles immediately upon online publication and to derive robust indicators over windows of months rather than years.

While there is a considerable body of literature on the meaning of citations and the indicators derived from them, download-derived indicators are a relatively recent advent, so there is no clear consensus on the nature of the phenomenon that download counts measure. A small body of research has, however, concluded that download counts may be a weak predictor of subsequent citation counts at the article level.

To gain a different perspective on national research impact, a novel indicator called field-weighted download impact (FWDI) has been developed according to the same principles applied to the calculation of field-weighted citation impact (FWCI; a Snowball metric). The impact of a publication, whether measured through citations or downloads, is normalised for discipline-specific behaviours. Full-text journal articles reside on a variety of publisher and aggregator websites, so there is no central database of download statistics available for comparative analysis. Instead, Elsevier’s full-text journal article platform ScienceDirect (representing some 16% of the articles indexed in Scopus) was used, on the assumption that downloading behaviour across countries does not systematically differ between online platforms. However, there is an important difference between FWCI and FWDI in this respect: FWCI is calculated over all target articles published in Scopus-covered journals, whereas FWDI relates only to target articles published in Elsevier journals. The effect of this difference will be tested in upcoming research.

In the current approach, a download is defined as the event where a user views the full-text HTML of an article or downloads the full-text PDF of an article from ScienceDirect. In accordance with the COUNTER Code of Practice, views of an article abstract alone are not counted, and repeated full-text HTML views or PDF downloads of the same article during the same user session are not counted more than once.
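The two steps just described, counting downloads under the session rule and then normalising them by field, can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the method used in the report. The record layouts (`UsageEvent`, `Article`) and the functions `count_downloads` and `fwdi` are hypothetical. "Expected" downloads are assumed to be the world mean for articles in the same field and publication year. A country's FWDI is assumed to be the mean of its articles' actual-to-expected ratios. Articles are assumed to count for every author country (whole counting). The COUNTER Code of Practice specifies further processing rules, for example on filtering automated traffic, that are not modelled here.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class UsageEvent:
    """Hypothetical platform log event; the field names are illustrative."""
    session_id: str
    article_id: str
    event_type: str  # "abstract", "html" or "pdf"


@dataclass(frozen=True)
class Article:
    """Hypothetical article record: field, publication year, author countries."""
    article_id: str
    field: str
    year: int
    countries: frozenset


def count_downloads(events):
    """Count downloads per article: abstract-only views are ignored, and
    repeated full-text views of one article in one session count once."""
    seen = set()
    counts = defaultdict(int)
    for e in events:
        if e.event_type not in ("html", "pdf"):
            continue  # an abstract view is not a download
        key = (e.session_id, e.article_id)
        if key in seen:
            continue  # already counted for this session
        seen.add(key)
        counts[e.article_id] += 1
    return dict(counts)


def fwdi(articles, counts, country):
    """Mean ratio of actual to expected downloads for a country's articles.
    Assumption: 'expected' is the world mean for the same (field, year)."""
    by_class = defaultdict(list)
    for a in articles:
        by_class[(a.field, a.year)].append(counts.get(a.article_id, 0))
    expected = {cls: mean(vals) for cls, vals in by_class.items()}
    ratios = [
        counts.get(a.article_id, 0) / expected[(a.field, a.year)]
        for a in articles
        # assumption: whole counting, so an article counts for every author country
        if country in a.countries and expected[(a.field, a.year)] > 0
    ]
    return mean(ratios) if ratios else None


# Usage with invented events and articles.
events = [
    UsageEvent("s1", "a1", "abstract"),
    UsageEvent("s1", "a1", "pdf"),
    UsageEvent("s1", "a1", "html"),  # same article, same session: not recounted
    UsageEvent("s2", "a1", "pdf"),
    UsageEvent("s3", "a2", "html"),
]
articles = [
    Article("a1", "Chemistry", 2012, frozenset({"UK"})),
    Article("a2", "Chemistry", 2012, frozenset({"BR"})),
]
counts = count_downloads(events)  # {"a1": 2, "a2": 1}
uk_fwdi = fwdi(articles, counts, "UK")  # 2 / 1.5, i.e. about 1.33
```

As with FWCI, a value of 1.0 in this sketch means an article set is downloaded exactly as often as the world average for its field and year.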

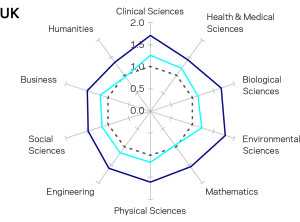

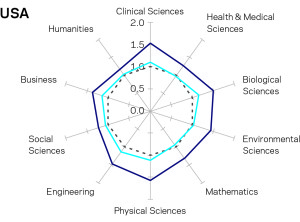

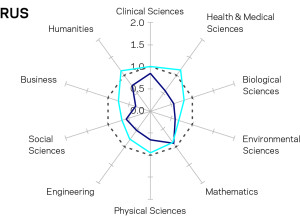

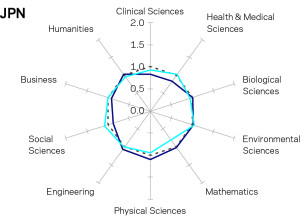

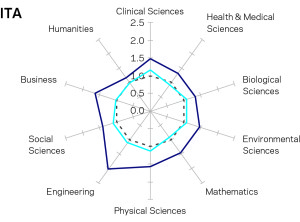

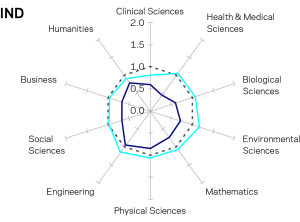

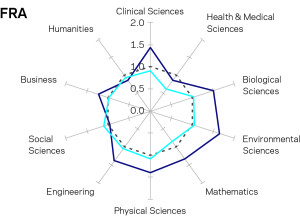

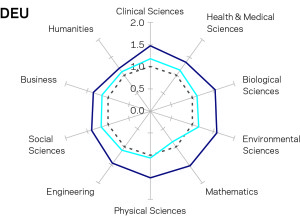

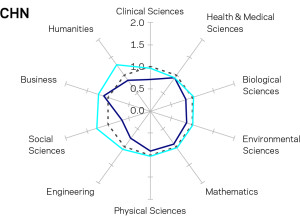

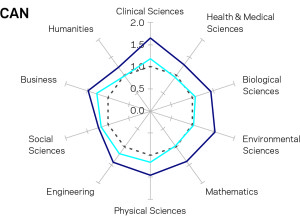

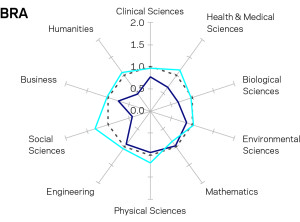

A comparison of FWCI (derived from Scopus data) and FWDI in 2012 across ten major research fields for selected countries is shown in Figure 1. The first point of note is that FWDI is typically more consistent than FWCI, both across fields and between countries. This may reflect an underlying convergence of FWDI between fields and across countries, owing to a greater degree of universality in download behaviour (i.e. reader interest, or an intention to read an article, as expressed by article downloads) than in citation behaviour. However, this cannot be discerned from analysis of these indicators alone and remains untested.

Nonetheless, FWDI does appear to offer an interesting supplementary view of a country’s research impact. For example, established research powerhouses such as the UK, USA, Japan, Italy, France, Germany and Canada show relatively rounded and consistent FWCI and FWDI values across fields. These contrast with the much less uniform patterns of field-weighted citation impact across fields for the emergent research nations of Brazil, Russia, India and China, for which FWCI is typically lower and more variable across research fields than FWDI. This suggests that, for these countries, reader interest expressed through article downloads is converted into citations at a relatively low rate. Again, this points to the idea that users download (and, by implication, read) widely across the literature but cite more selectively, and it may reflect differences in the ease (and meaning) of downloading versus citing. Another possible explanation is that, depending on the country, the reading and citing communities may overlap to a greater or lesser extent. Regional coverage may be a third relevant factor. Publications from countries with a weak link between downloads and citations may be preferentially downloaded by authors from the same countries. They may then be cited in local journals, which are not as extensively covered in Scopus as English-language journals.


Figure 1 — Field-weighted citation impact (FWCI) and field-weighted download impact (FWDI) for selected countries across ten research fields in 2012. For all research fields, a field-weighted citation or download impact of 1.0 corresponds to the world average in that field. Note that the axis maximum is increased to 2.5 for Italy. Source: Scopus and ScienceDirect.
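Figure 1 is read visually in the text above. One hypothetical way to put numbers on "more uniform across fields", and on how well downloads convert into citations, is the following. For each country, compute the coefficient of variation of each indicator across fields, and the per-field ratio FWCI/FWDI. The Python sketch below does this. The field values are invented for illustration and are not read from Figure 1. Using the coefficient of variation is an assumption, not part of the report's method.

```python
from statistics import mean, pstdev


def coefficient_of_variation(values):
    """Population standard deviation divided by the mean (unitless spread)."""
    return pstdev(values) / mean(values)


def compare_profiles(fwci, fwdi):
    """Spread of each indicator across fields, plus the per-field ratio of
    citation impact to download impact (both dicts keyed by field)."""
    fields = sorted(fwci)
    return {
        "cv_fwci": coefficient_of_variation([fwci[f] for f in fields]),
        "cv_fwdi": coefficient_of_variation([fwdi[f] for f in fields]),
        "fwci_per_fwdi": {f: fwci[f] / fwdi[f] for f in fields},
    }


# Invented values for one hypothetical country; NOT read from Figure 1.
fwci = {"Biology": 0.6, "Chemistry": 0.9, "Medicine": 0.4, "Physics": 0.7}
fwdi = {"Biology": 1.0, "Chemistry": 1.1, "Medicine": 0.9, "Physics": 1.0}
summary = compare_profiles(fwci, fwdi)
# With these inputs FWCI varies more across fields than FWDI (cv_fwci > cv_fwdi),
# and a ratio below 1 means citation impact lags download impact in that field.
```

A scale-free spread measure is used here because FWCI and FWDI profiles can sit at different overall levels, and the question in the text is about uniformity rather than level.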

Examining authorship and article download activity by corporate and academic authors and users as a novel indicator of cross-sector knowledge exchange

Knowledge exchange is a two-way transfer of ideas and information. In research policy terms, the focus is typically on academic-industry knowledge exchange as a conduit between public sector investment in research and its private sector commercialisation, ultimately leading to economic growth. Knowledge exchange is a complex and multi-dimensional phenomenon whose essence cannot be wholly captured by indicator-based approaches: knowledge resides with people rather than in documents, and much of it is tacit or difficult to articulate. Despite this, meaningful indicators of knowledge exchange are still required to inform evidence-led policy. To that end, a unique view of knowledge exchange between academic and corporate authors and readers can be derived in two ways: by analysing the downloading sector of articles with at least one corporate author, and the authorship sector of articles downloaded by corporate users.

Given the context of the ‘International Comparative Performance of the UK Research Base: 2013’ report, this was done on the basis of UK corporate-authored articles and UK-based corporate users. Again, ScienceDirect data were used, on the assumption that downloading behaviour across sectors (academic and corporate in this analysis) does not systematically differ between online platforms.

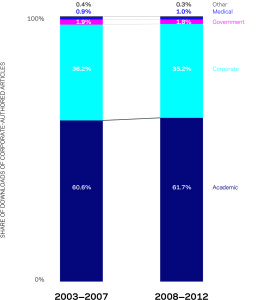

Figure 2 shows the share of downloads of articles with at least one corporate-affiliated author (derived from Scopus), broken down by downloading sector (as defined within ScienceDirect), in two consecutive, non-overlapping time periods. Downloading of UK articles with one or more corporate-affiliated authors by users in other UK sectors indicates strong cross-sector knowledge flows within the country. Some 61.7% of all downloads of corporate-authored articles in the period 2008-12 came from users in the academic sector (see Figure 2). This is an increase of 1.1 percentage points over the equivalent share of 60.6% for the period 2003-07. Users in the corporate sector themselves accounted for 35.2% of downloads of corporate-authored articles in the period 2008-12, a decrease of 1.0 percentage point from the 36.2% share in the period 2003-07. Taken together, these results indicate high and increasing usage of corporate-authored research by the academic sector.

Figure 2 — Share of downloads of articles with at least one corporate author by downloading sector, 2003-07 and 2008-12. Source: Scopus and ScienceDirect.
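The changes in Figure 2 are differences between two shares, so they are best read as percentage points rather than relative percentages. The academic share rose by 1.1 points, from 60.6% to 61.7%, which is a relative increase of about 1.8%. The short Python sketch below makes the distinction explicit using the shares quoted in the text. The raw download counts passed to `sector_shares`, including the "other" sector, are hypothetical and serve only to show how shares are formed.

```python
def sector_shares(downloads_by_sector):
    """Percentage share of downloads for each downloading sector."""
    total = sum(downloads_by_sector.values())
    return {s: 100 * n / total for s, n in downloads_by_sector.items()}


def change_in_points(before, after):
    """Change in each sector's share, in percentage points (rounded to 0.1)."""
    return {s: round(after[s] - before[s], 1) for s in before if s in after}


def relative_change(before, after):
    """Relative change in each sector's share, in percent."""
    return {s: 100 * (after[s] - before[s]) / before[s] for s in before if s in after}


# Hypothetical raw counts, only to show how shares are formed.
shares = sector_shares({"academic": 617, "corporate": 352, "other": 31})

# Shares quoted in the text for downloads of UK corporate-authored articles.
shares_2003_07 = {"academic": 60.6, "corporate": 36.2}
shares_2008_12 = {"academic": 61.7, "corporate": 35.2}
points = change_in_points(shares_2003_07, shares_2008_12)  # academic +1.1, corporate -1.0
relative = relative_change(shares_2003_07, shares_2008_12)  # academic about +1.8%
```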

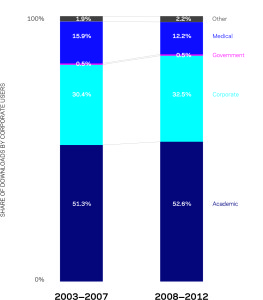

Figure 3 shows the share of downloads by users in the corporate sector (as defined within ScienceDirect), broken down by author affiliation (derived from Scopus), in the same two time periods. Downloading of UK articles by users in the UK corporate sector also suggests increasing cross-sector knowledge flows within the country. Some 52.6% of all downloads by corporate users in the period 2008-12 were of articles with one or more academic-affiliated authors, and 32.5% were of articles with one or more corporate authors (see Figure 3). Both of these shares have increased (by 1.3 and 2.1 percentage points, respectively) over the equivalent shares for the period 2003-07. Over the same interval, the share of corporate users' downloads going to articles with at least one medically affiliated author has decreased. Taken together, these results indicate high and increasing usage of UK academic-authored research by the UK corporate sector.

Figure 3 — Share of downloads by corporate-sector users, by author affiliation sector, 2003-07 and 2008-12. Shares add to 100% despite co-authorship of some articles between sectors because they are derived from the duplicated total download count across all sectors. Source: Scopus and ScienceDirect.
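One reading of the Figure 3 caption is the following. A download of an article co-authored across sectors is credited once to each sector among its authors. The sum of the sector counts (the "duplicated total") therefore exceeds the number of downloads, and computing shares against that duplicated total makes them add to 100%. A minimal Python sketch of this reading follows. The article identifiers, the sector labels and the helper `shares_by_author_sector` are hypothetical.

```python
from collections import Counter


def shares_by_author_sector(downloads):
    """Shares of downloads by author affiliation sector, using whole counting.

    `downloads` holds one (article_id, author_sectors) pair per download.
    A download of a cross-sector article is credited to every sector among
    its authors, so the sector counts sum to a 'duplicated' total that can
    exceed the number of downloads; shares are taken against that total."""
    per_sector = Counter()
    for _article_id, sectors in downloads:
        for sector in sectors:
            per_sector[sector] += 1
    duplicated_total = sum(per_sector.values())
    shares = {s: 100 * n / duplicated_total for s, n in per_sector.items()}
    return shares, duplicated_total


# Four hypothetical downloads by corporate users.
downloads = [
    ("a1", {"academic"}),
    ("a2", {"academic", "corporate"}),  # co-authored: credited to both sectors
    ("a3", {"corporate"}),
    ("a4", {"medical"}),
]
shares, duplicated_total = shares_by_author_sector(downloads)
# duplicated_total is 5 (not 4); shares are 40% academic, 40% corporate and
# 20% medical, and they add to 100%.
```

Dividing by the number of downloads instead (4 here) would give shares summing to more than 100%, which is the situation the caption's note rules out.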

Article downloads as a novel indicator: conclusion

In the ‘International Comparative Performance of the UK Research Base: 2013’ report, download data were used alongside citation data in international comparisons to help uncover fresh insights into the performance of the UK as a national research system in an international context.

Nevertheless, some methodological questions remain to be answered. Clearly, the assumption that download behaviours do not differ across platforms needs to be put to the test in future research. The analysis of the relationship between FWCI and FWDI showed that this relationship differs from one country to another. The examples of downloading publications from a different sector focus solely on the UK and should be complemented with views of other countries.

We envisage that the approaches outlined in this article, while currently quite novel, will one day become commonplace in the toolkits of those involved in research performance assessments globally, to the benefit of research, researchers, and society.

References

MOED, H.F. Statistical relationships between downloads and citations at the level of individual documents within a single journal. Journal of the American Society for Information Science and Technology. 2005, v. 56, pp. 1088-1097.

SCHLOEGL, C., and GORRAIZ, J. Comparison of citation and usage indicators: The case of oncology journals. Scientometrics. 2010, v. 82, pp. 567-580.

SCHLOEGL, C., and GORRAIZ, J. Global usage versus global citation metrics: The case of pharmacology journals. Journal of the American Society for Information Science and Technology. 2011, v. 62, pp. 161-170.

MOED, H.F. Citation Analysis in Research Evaluation. Dordrecht: Springer. 2005. p. 81.

CRONIN, B. A hundred million acts of whimsy? Current Science. 2005, v. 89, pp. 1505-1509.

BORNMANN, L., and DANIEL, H. What do citation counts measure? A review of studies on citing behavior. Journal of Documentation. 2008, v. 64, pp. 45-80.

KURTZ, M.J., and BOLLEN, J. Usage Bibliometrics. Annual Review of Information Science and Technology. 2010, v. 44, pp. 3-64.

See http://www.snowballmetrics.com/.

External Links

Rise of alternative metrics – http://www.researchtrends.com/issue-35-december-2013/towards-a-common-model-of-citation-some-thoughts-o

International Comparative Performance of the UK Research Base: 2013 – http://info.scival.com/research-initiatives/BIS2013

Scopus – http://www.scopus.com/

Snowball metric – http://www.snowballmetrics.com/

Science Direct – http://www.sciencedirect.com/

COUNTER Code of Practice – http://www.projectcounter.org/code_practice.html

Original article in English

http://www.researchtrends.com/issue-36-march-2014/article-downloads/
