By Jan Velterop

Every scientist is likely to be familiar with this quote by Isaac Newton: “If I have seen further, it is by standing on the shoulders of giants” [1]. He was not the first to express the idea, which has been traced back to the 12th century and attributed to Bernard of Chartres. Even Bernard may have been inspired by Greek mythology, perhaps by the myth of Cedalion.

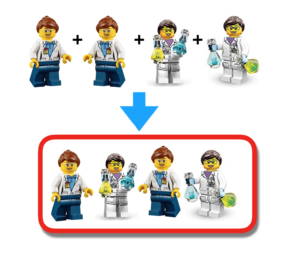

But if scientists nowadays are able to see further, it is because they are standing on a whole pyramid of fellow scientists. And that counts only those working within a single discipline. Those who seek inter- or multidisciplinary applications of scientific findings need to straddle several such pyramids. The glory and career prospects of reaching such heights are great, but few scientists reach them anymore. One reason is that an increasing amount of research is being done, and published, by groups of scientists rather than by lone individuals. Multi-author articles are on the rise, and have been for a while. According to Eldon Shaffer [2], a century ago single authors wrote the vast majority (>98%) of important medical articles; single authorship has since become a rarity, with fewer than 5% of such articles now having a single author.

The quest for glory is becoming more difficult as a result. With two or three authors, each can still extract points for their place in the pecking order of the scientific ego-system, but with more authors it becomes complicated. But is this a problem? Isn’t the real problem the ego-system itself? The pressure to publish, and to add credits to one’s curriculum vitae (preferably in the form of publications in high impact factor journals), is relentless.

The negative consequences of that pressure should not be underestimated: exaggerated claims, p-value fetishism, data selectivity, or even fraud, not always caught by peer review or post-publication scrutiny. When such problems are caught only after publication, it is no exaggeration to say that this undermines trust in the science communication system and breeds mistrust of science as a whole: mistrust by the general public and, perhaps more importantly, by politicians and opinion leaders. This is disastrous for society as a whole.

The chasing of citations and impact factors is problematic for society, though not necessarily for science itself, according to Daniele Fanelli. BuzzFeed News, reporting on his PNAS article, quotes him as saying that “our main conclusion is that you can’t say bias is hampering science as a whole” [3,4]. In the same article, he is also quoted as saying:

“Part of the reason why highly cited articles might be less reliable, is that surprising, exciting results tend to be more highly cited – but, of course, one reason that a result might be surprising is that it’s wrong, or at least overestimated. Sometimes a study will ‘find’ some dramatic effect just by statistical fluke, and cause great excitement, only for later studies to look at it and find something less impressive. We do have some evidence that that’s true. We found consistently that the first studies [of a phenomenon] find something strong and significant, but subsequent studies show something else”.

(This is generally described as “regression to the mean.”)

Quoting Fanelli again:

“The papers most likely to show bias were small studies with few participants – unsurprisingly, since larger studies have less random ‘noise’ in the data. There are perfectly good reasons to do small studies: it might make sense in a preliminary study, before you invest in a large study. The trouble is that other scientists – and, especially, science reporters – may treat these small, pathfinder studies as trustworthy. The responsible, scientifically accurate way to report on science is to be aware of the background literature and put it into that context. If it’s reporting evidence of an exciting new finding, it should be treated with great caution. If it’s the fifth big study and they consistently report a phenomenon, you can be surer”.

How can we improve the situation and create more trust in the system? Getting rid of the pressure to publish in prestige journals would help greatly. That pressure creates a temptation to flatter research results, or worse: sometimes by leaving out data that do not support the hypothesis, sometimes by over-egging the results, sometimes by outright fraud. The temptation exists because authors are judged not on the quality of their research but on the citeability of their publications, and the two are not the same. Those judgments determine their funding and career prospects. So it is understandable that authors focus on ‘sexing up’ their results, making them as spectacular as they can get away with in order to achieve the holy grail: publication in a prestigious journal. I have quoted Napoleon Bonaparte on a number of occasions (though the quote is likely apocryphal): “You can offer people money and they wouldn’t risk their lives for it. But give them a ribbon, and they do anything you want them to do”. Carry the idea over to the scientific realm and, somewhat unfairly perhaps, it translates as “no rational scientist would put themselves at risk for principle, but offer them the possibility of being published in a prestige journal, and there’s no limit to what they’ll do to jazz up their results”.

How does one get rid of this ‘ego-systemic’ pressure that encourages all this and, consequently, provides ammunition to those who undermine trust in science? Daniël Lakens offered an interesting insight in a recent interview on Dutch radio. (He may yet publish it on his blog, but had not done so at the time of writing, although his latest blog entry [5], of 4 August 2017, does address a collective approach to data gathering.) He argued that excessive ego could be curbed by larger-scale collaborations. I think he is right. Larger-scale collaborations dilute the ego of individual authors. If the collaborations themselves were given a name and cited, rather than the authors (not even the first author, as in the usual construction “Joe Bloggs et al.”), it would also be easier to acknowledge the roles of everyone involved in the research in a note at the end of an article. Not just those who actually wrote the article, but also those who contributed substantially to the study’s conception and design, to the gathering of data, to the analysis (including statistics) and interpretation of the data, to the critical assessment of the content, et cetera (Ernesto Spinak discussed author credit on the SciELO in Perspective blog in 2014 [6]). And those who inspired the research, or played an important personal role in discussions about the subject (the sort of people sometimes rewarded with a ‘guest’ or ‘honorary’ authorship), could of course be mentioned in such a note as well.

To repeat the title of this blog entry: science is largely a collective enterprise, and that collectivity needs to be recognized more explicitly.

Notes

1. Standing on the shoulders of giants [online]. Wikipedia. 2017 [viewed 07 August 2017]. Available from: http://en.wikipedia.org/wiki/Standing_on_the_shoulders_of_giants

2. SHAFFER, E. Too many authors spoil the credit. Can J Gastroenterol Hepatol. [online]. 2014, vol. 28, no. 11, pp. 605 [viewed 07 August 2017]. Available from: http://www.ncbi.nlm.nih.gov/pmc/articles/PMC4277173/

3. CHIVERS, T. The More Widely Cited A Study Is, The More Likely It Is To Exaggerate [online]. BuzzFeed News. 2017 [viewed 07 August 2017]. Available from: http://www.buzzfeed.com/tomchivers/the-more-widely-cited-a-study-is-the-less-reliable-it-is

4. FANELLI, D, COSTAS, R and IOANNIDIS, J. P. A. Meta-assessment of bias in science. PNAS [online]. 2017, vol. 114, no. 14, pp. 3714-3719 [viewed 07 August 2017]. DOI: 10.1073/pnas.1618569114. Available from: http://www.pnas.org/content/114/14/3714

5. LAKENS, D. Towards a more collaborative science with StudySwap [online]. The 20% Statistician. 2017 [viewed 07 August 2017]. Available from: http://daniellakens.blogspot.com.br/2017/08/towards-more-collaborative-science-with.html

6. SPINAK, E. Author credits… Credited for what? [online]. SciELO in Perspective, 2014 [viewed 07 August 2017]. Available from: http://blog.scielo.org/en/2014/07/17/author-credits-credited-for-what/

References

Bernard of Chartres [online]. Wikipedia. 2017 [viewed 07 August 2017]. Available from: http://en.wikipedia.org/wiki/Bernard_of_Chartres

Cedalion [online]. Wikipedia. 2017 [viewed 07 August 2017]. Available from: http://en.wikipedia.org/wiki/Cedalion

CHIVERS, T. The More Widely Cited A Study Is, The More Likely It Is To Exaggerate [online]. BuzzFeed News. 2017 [viewed 07 August 2017]. Available from: http://www.buzzfeed.com/tomchivers/the-more-widely-cited-a-study-is-the-less-reliable-it-is

FANELLI, D, COSTAS, R and IOANNIDIS, J. P. A. Meta-assessment of bias in science. PNAS [online]. 2017, vol. 114, no. 14, pp. 3714-3719 [viewed 07 August 2017]. DOI: 10.1073/pnas.1618569114. Available from: http://www.pnas.org/content/114/14/3714

LAKENS, D. Towards a more collaborative science with StudySwap [online]. The 20% Statistician. 2017 [viewed 07 August 2017]. Available from: http://daniellakens.blogspot.com.br/2017/08/towards-more-collaborative-science-with.html

REGALADO, A. Multiauthor papers on the rise. Science [online]. 1995, vol. 268, no. 5207, pp. 25 [viewed 07 August 2017]. DOI: 10.1126/science.7701334. Available from: http://science.sciencemag.org/content/268/5207/25.1.long

SHAFFER, E. Too many authors spoil the credit. Can J Gastroenterol Hepatol. [online]. 2014, vol. 28, no. 11, pp. 605 [viewed 07 August 2017]. Available from: http://www.ncbi.nlm.nih.gov/pmc/articles/PMC4277173/

SPINAK, E. Author credits… Credited for what? [online]. SciELO in Perspective, 2014 [viewed 07 August 2017]. Available from: http://blog.scielo.org/en/2014/07/17/author-credits-credited-for-what/

Standing on the shoulders of giants [online]. Wikipedia. 2017 [viewed 07 August 2017]. Available from: http://en.wikipedia.org/wiki/Standing_on_the_shoulders_of_giants

TSCHARNTKE, T. Author Sequence and Credit for Contributions in Multiauthored Publications. PLoS Biol [online]. 2007, vol. 5, no. 1, e18 [viewed 07 August 2017]. DOI: 10.1371/journal.pbio.0050018. Available from: http://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.0050018

External link

The 20% Statistician – <http://daniellakens.blogspot.nl/>

About Jan Velterop

Jan Velterop (1949), marine geophysicist who became a science publisher in the mid-1970s. He started his publishing career at Elsevier in Amsterdam. In 1990 he became director of a Dutch newspaper, but returned to international science publishing in 1993 at Academic Press in London, where he developed the first country-wide deal that gave electronic access to all AP journals to all institutes of higher education in the United Kingdom (later known as the Big Deal). He next joined Nature as director, but quickly moved on to help get BioMed Central off the ground. He participated in the Budapest Open Access Initiative. In 2005 he joined Springer, based in the UK, as Director of Open Access. In 2008 he left to help further develop semantic approaches to accelerate scientific discovery. He is an active advocate of BOAI-compliant open access and of the use of microattribution, the hallmark of so-called “nanopublications”. He has published several articles on both topics.

Read the comment in Spanish, by julio cesar:

http://blog.scielo.org/es/2017/08/11/la-ciencia-es-fundamentalmente-un-emprendimiento-colectivo-esta-colectividad-debe-ser-reconocida-mas-explicitamente/#comment-41184

Read the comment in Portuguese, by José Joaquín Lunazzi:

http://blog.scielo.org/blog/2017/08/11/a-ciencia-e-fundamentalmente-um-empreendimento-coletivo-esta-coletividade-deve-ser-reconhecida-mais-explicitamente/#comment-27778